Smackathon Project – Super Sing

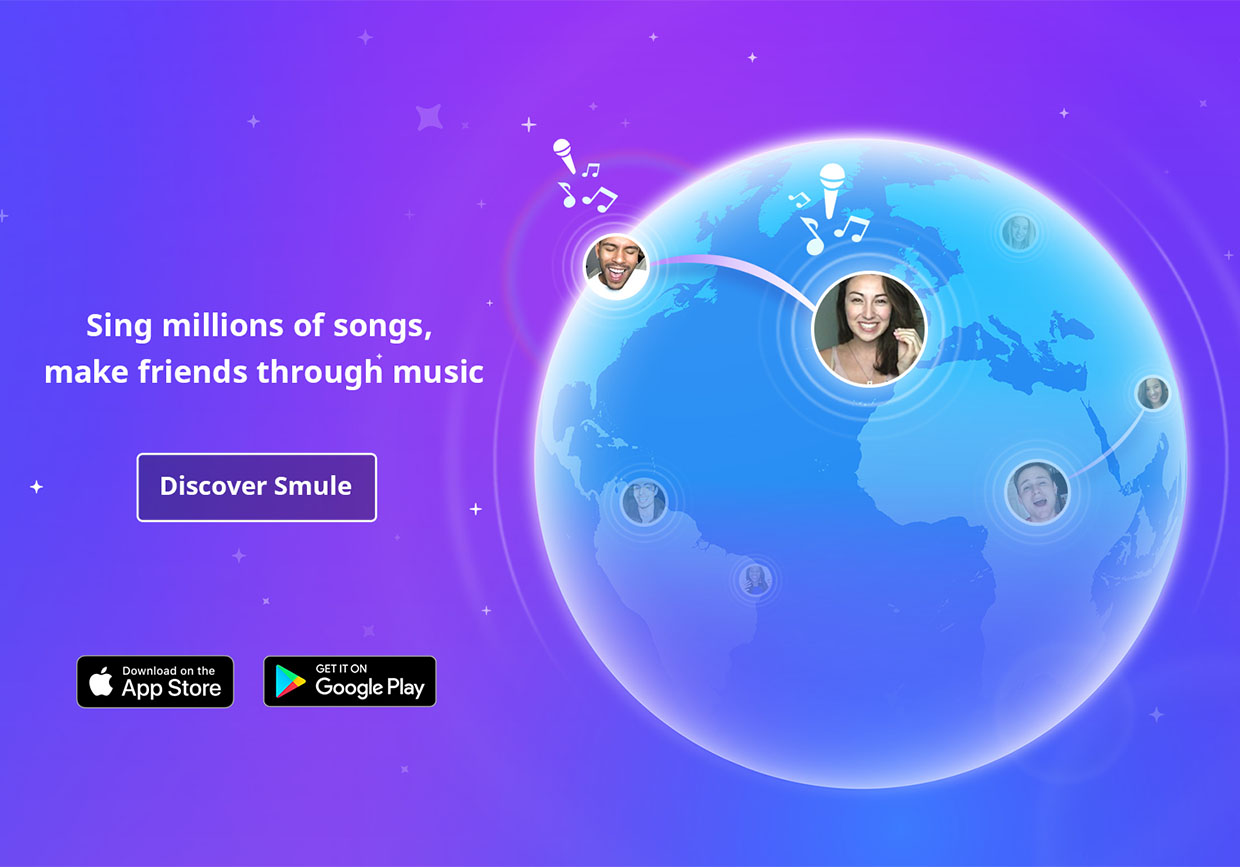

Super Sing is the Smackathon project created by team “Born-in-the-90s,” a solo team consisting of me, Nick R. Using Smule’s unique access to millions of karaoke recordings from our “Sing! Karaoke” application, Super Sing puts the user in complete control of a giant group singing experience. Watch the demo above and read on to learn more!

An Overview

The demo above shows a Super Sing performance of Call Me Maybe, featuring 100+ top singers from the Sing! Karaoke application. Each vertical line represents a single vocal-only track, i.e., one person singing somewhere in the world. In this particular demo, moving the mouse along the bottom of the screen switches dynamically between individual singers; placing the mouse between two lines produces a duet of the two neighboring singers. Raising the mouse towards the top of the screen pulls more singers into the performance, seamlessly blended together without audio clipping or distortion. At the very top of the screen, all 100+ singers are singing together in a full-on Super Sing performance.
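To make the mouse mapping concrete, here is a minimal Python sketch of one way the interaction described above could be expressed. It is an illustration under stated assumptions, not the code from the hackathon build: the function name `singer_gains`, the normalized mouse coordinates, the evenly spaced singer lines, and the "divide by the total gain" rule for avoiding clipping are all hypothetical choices made for this example.

```python
from typing import List


def singer_gains(mouse_x: float, mouse_y: float, n_tracks: int) -> List[float]:
    """Return one gain per vocal track for a given mouse position.

    Assumptions (not taken from the original project):
      * mouse_x and mouse_y are normalized to [0, 1];
        mouse_y = 0 is the bottom of the screen, 1 is the top.
      * singer lines are evenly spaced from x = 0 to x = 1.
    """
    if n_tracks <= 0:
        return []
    if n_tracks == 1:
        return [1.0]

    spacing = 1.0 / (n_tracks - 1)   # distance between neighboring lines
    reach = spacing + mouse_y        # how far from the mouse singers are pulled in

    raw = []
    for i in range(n_tracks):
        distance = abs(mouse_x - i * spacing)
        # Full gain inside the reach, fading linearly to 0 over one spacing.
        raw.append(max(0.0, min(1.0, (reach - distance) / spacing)))

    # Scale down when many singers are active so the summed vocals
    # cannot exceed the level of a single full-volume singer.
    scale = 1.0 / max(1.0, sum(raw))
    return [g * scale for g in raw]


if __name__ == "__main__":
    print(singer_gains(0.5, 0.0, 5))    # mouse on the middle line: a solo
    print(singer_gains(0.375, 0.0, 5))  # mouse between two lines: a duet
    print(singer_gains(0.5, 1.0, 5))    # mouse at the top: everyone sings
```

With the mouse at the bottom, only the nearest one or two singers receive gain; as `mouse_y` grows, the reach widens until every track is included. Dividing by the total is a deliberately conservative way to rule out clipping in the vocal sum; a real mixer might prefer a gentler scaling.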

Note that the application also has a Leap Motion compatible mode, which allows complete control of the Super Sing performance just by moving your fingers in front of the computer in 3D space. Perhaps I can upload a video of this interaction in the future.

The Details

As with all the hackathon projects, Super Sing was put together from start to finish in under 48 hours. To start, I used code from the Sing! Karaoke iPhone application itself to download 75 of the top vocal performances of Call Me Maybe (each of which can consist of a single singer or multiple singers, resulting in 100+ unique singers). I then used Apple’s CoreAudio programming framework to write a simple audio graph that could dynamically mix all the vocal tracks together with the background. To achieve this, all the vocal tracks stream together at all times, with most of them at zero volume. Then, based on the mouse location on screen, the volumes of the vocal tracks are raised and lowered, shifting in real time between individual performances, group performances, and a blend of potentially all 100+ singers together. The background track for the song always runs at full volume behind these vocal tracks to create the final, dynamically generated sound you hear.
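The mixing idea can be sketched in a few lines of Python. This is only an illustration of the approach described above, not the CoreAudio graph itself: `mix_block`, the per-block linear gain ramp (added here to avoid audible clicks when a gain changes), and the final hard clamp to [-1, 1] are assumptions made for the example, and samples are assumed to be floats in that range.

```python
from typing import List, Sequence


def mix_block(
    backing: Sequence[float],
    vocals: Sequence[Sequence[float]],
    start_gains: Sequence[float],
    end_gains: Sequence[float],
) -> List[float]:
    """Mix one block of audio: backing track plus gain-weighted vocal tracks.

    Every vocal track is read for every block (all tracks keep streaming);
    a track with zero gain simply contributes nothing to the output.
    Gains ramp linearly from start_gains to end_gains across the block.
    """
    n_frames = len(backing)
    out = list(backing)  # the backing track always plays at full volume

    for track, g0, g1 in zip(vocals, start_gains, end_gains):
        if g0 == 0.0 and g1 == 0.0:
            continue  # silent for the whole block, nothing to add
        for i in range(n_frames):
            t = (i + 1) / n_frames          # ramp position, reaches 1 at block end
            out[i] += (g0 + (g1 - g0) * t) * track[i]

    # Safety clamp (an assumption of this sketch) in case backing plus
    # vocals momentarily exceeds full scale.
    return [max(-1.0, min(1.0, s)) for s in out]


if __name__ == "__main__":
    backing = [0.1, 0.1]
    vocals = [[0.5, 0.5], [0.4, 0.4]]
    # First singer at full volume, second singer muted but still streaming.
    print(mix_block(backing, vocals, [1.0, 0.0], [1.0, 0.0]))
```

Calling `mix_block` once per audio buffer, with `end_gains` recomputed from the latest mouse position and the previous block's `end_gains` passed in as `start_gains`, gives the real-time shifting between solo, group, and full-choir mixes.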

Once I had the audio working to my liking, I turned my attention to graphics. At heart I am truly a graphics programmer, and love nothing more in the field of computer science than real-time graphics and simulation. In the spirit of the hackathon, I used this opportunity to try a new graphics approach, and built the entire application on the GPU (graphics processing unit). I won’t bore you with all the details of GPU programming and the benefits it provides, but I urge you to Google it if you have the interest. Most modern-day applications use the GPU to speed up complex graphics tasks in conjunction with the traditional CPU. By generating the graphics for my application entirely on the GPU, I give the CPU complete freedom to focus solely on the complicated audio rendering. This is what allows streaming 100+ vocal tracks simultaneously while also displaying sophisticated, colorful, and dynamically alive graphics.

Retrospective

All in all, I’m extremely happy with how Super Sing turned out. The graphics completely realized my vision for the user interface, and the audio mixing ended up sounding much better than I expected.